Mobile Spatial Attention: Open Wearable Eye Tracking for Human–Machine Alignment

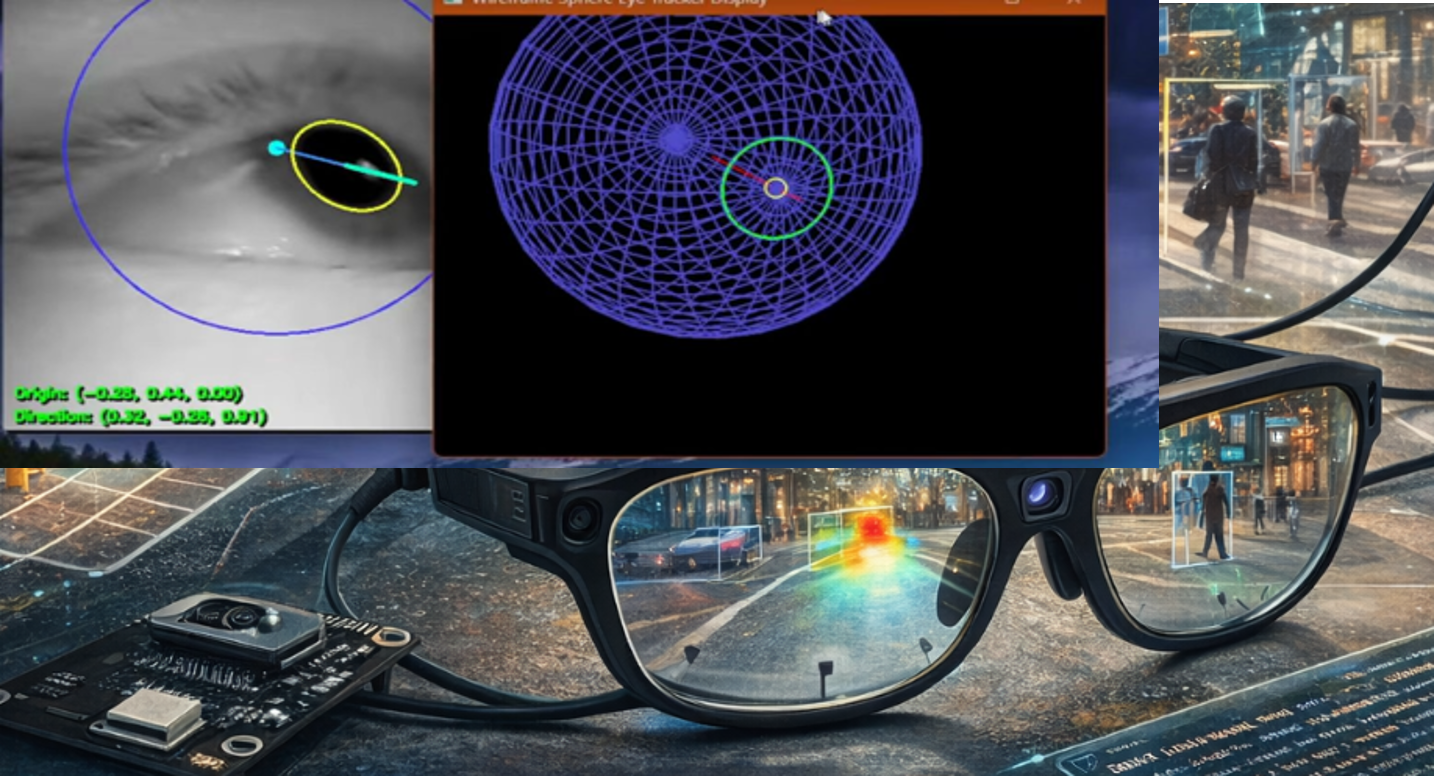

Mobile spatial attention as a mechanism for human–machine alignment.

This project investigates how attention is organized in real mobile environments. I develop an open and extensible wearable eye-tracking system to measure spatial attention in the wild, map gaze into 3D semantic space, and study whether human attention can serve as a supervision signal for safer and more interpretable machine attention.

Motivation

Attention research has a long tradition in controlled laboratory settings. However, when people move through real urban spaces, visual input, body motion, task goals, and environmental semantics interact continuously. In this project, I focus on the spatial organization of attention in the wild, aiming to characterize its structure using quantitative spatial metrics rather than qualitative description.

At the same time, robotics and autonomous driving systems often rely on internal attention maps. Whether these machine attention patterns are aligned with human attention remains unclear, and the answer matters for safety, failure diagnosis, and interpretability.

Research Questions

How is attention organized in space while moving? I ask whether attention exhibits stable spatial structure across routes and participants, whether there are repeatable “topological key points” (such as intersections and turning points), and whether the distribution can be characterized by quantitative measures including entropy, bias, and scale.

Can human attention be used as a training signal for machines? I explore how gaze can be mapped into world coordinates and semantic maps, how to define attention alignment metrics between humans and models, and whether alignment can improve safety and interpretability in downstream decision-making.

Quantitative metrics under study:

- Spatial entropy

- Topological anticipation distance

- Semantic bias index

- Attention alignment score
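As an illustration of the first metric, spatial entropy can be sketched as the Shannon entropy of gaze points binned on a regular grid. This is a minimal sketch, not the project's definition: the function name `spatial_entropy`, the `cell_size` parameter, and the choice of a flat 2D grid are all assumptions made for the example.

```python
import math
from collections import Counter


def spatial_entropy(points, cell_size=1.0):
    """Shannon entropy (in bits) of 2D gaze points binned on a regular grid.

    points: iterable of (x, y) coordinates in any consistent unit.
    cell_size: edge length of a grid cell (hypothetical parameter).
    """
    points = list(points)
    if not points:
        raise ValueError("need at least one gaze point")
    # Count how many gaze points fall into each grid cell.
    counts = Counter(
        (math.floor(x / cell_size), math.floor(y / cell_size)) for x, y in points
    )
    total = len(points)
    # H = -sum(p * log2(p)) over occupied cells.
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


# Gaze concentrated in one cell versus spread evenly over four cells.
focused = [(0.2, 0.3), (0.4, 0.1), (0.9, 0.8)]
spread = [(0.5, 0.5), (1.5, 0.5), (0.5, 1.5), (1.5, 1.5)]
print(spatial_entropy(focused), spatial_entropy(spread))
```

Under this definition, low entropy means gaze is concentrated in few cells and high entropy means it is spread out. The value depends on `cell_size`, so comparisons across routes or participants would need a fixed grid resolution.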

Wearable Eye-Tracking in the Wild

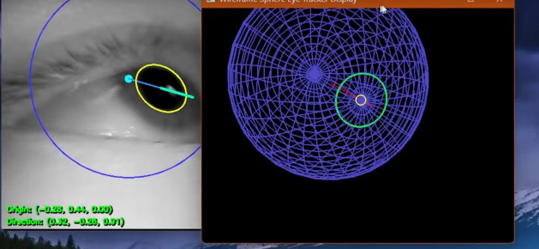

The system is designed for mobile data collection. It records eye images, estimates gaze, and explicitly synchronizes the eye cameras, scene cameras, and external sensors, so that gaze can be projected into 3D reconstructions and semantic maps.

Left: eye tracking monitoring snapshot. Right: prototype overview (design sketch and 3D-printed assembly).

Video source reference: YouTube.
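The synchronization between eye and scene cameras described in this section can be illustrated with nearest-timestamp matching. This is a sketch under stated assumptions: both streams are assumed to share one clock, timestamps are in seconds, and the names `match_nearest` and `max_gap` are hypothetical rather than part of the system.

```python
from bisect import bisect_left


def match_nearest(eye_ts, scene_ts, max_gap=0.02):
    """Pair each eye-camera timestamp with the nearest scene-camera frame.

    eye_ts: eye-camera timestamps in seconds.
    scene_ts: scene-camera timestamps in seconds, sorted ascending.
    max_gap: largest tolerated time difference (hypothetical threshold).
    Returns one scene-frame index per eye sample, or None if no frame is close enough.
    """
    matches = []
    for t in eye_ts:
        i = bisect_left(scene_ts, t)
        # The nearest frame is either just before or just after the insertion point.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(scene_ts)]
        best = min(candidates, key=lambda j: abs(scene_ts[j] - t), default=None)
        if best is not None and abs(scene_ts[best] - t) > max_gap:
            best = None  # Too far apart in time to trust the pairing.
        matches.append(best)
    return matches


scene = [0.0, 0.033, 0.066]
eye = [0.001, 0.030, 0.050, 0.200]
print(match_nearest(eye, scene))
```

Returning `None` for unmatched samples keeps dropped frames visible in the data instead of silently pairing gaze with a stale scene image.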

Reference Devices and Why Open Hardware

Commercial wearable eye trackers (e.g., Tobii Pro Glasses 3 and Pupil Labs Core/Invisible) offer strong performance, but their proprietary pipelines limit algorithm-level transparency and experimental flexibility. For research that targets mechanisms rather than a single application, control over calibration, error propagation, and data interfaces is essential.

This is why I decided to build an open system from scratch, prioritizing transparency, extensibility, and reproducibility.

Images: Tobii Pro Glasses 3 (official page), Pupil Labs (documentation).

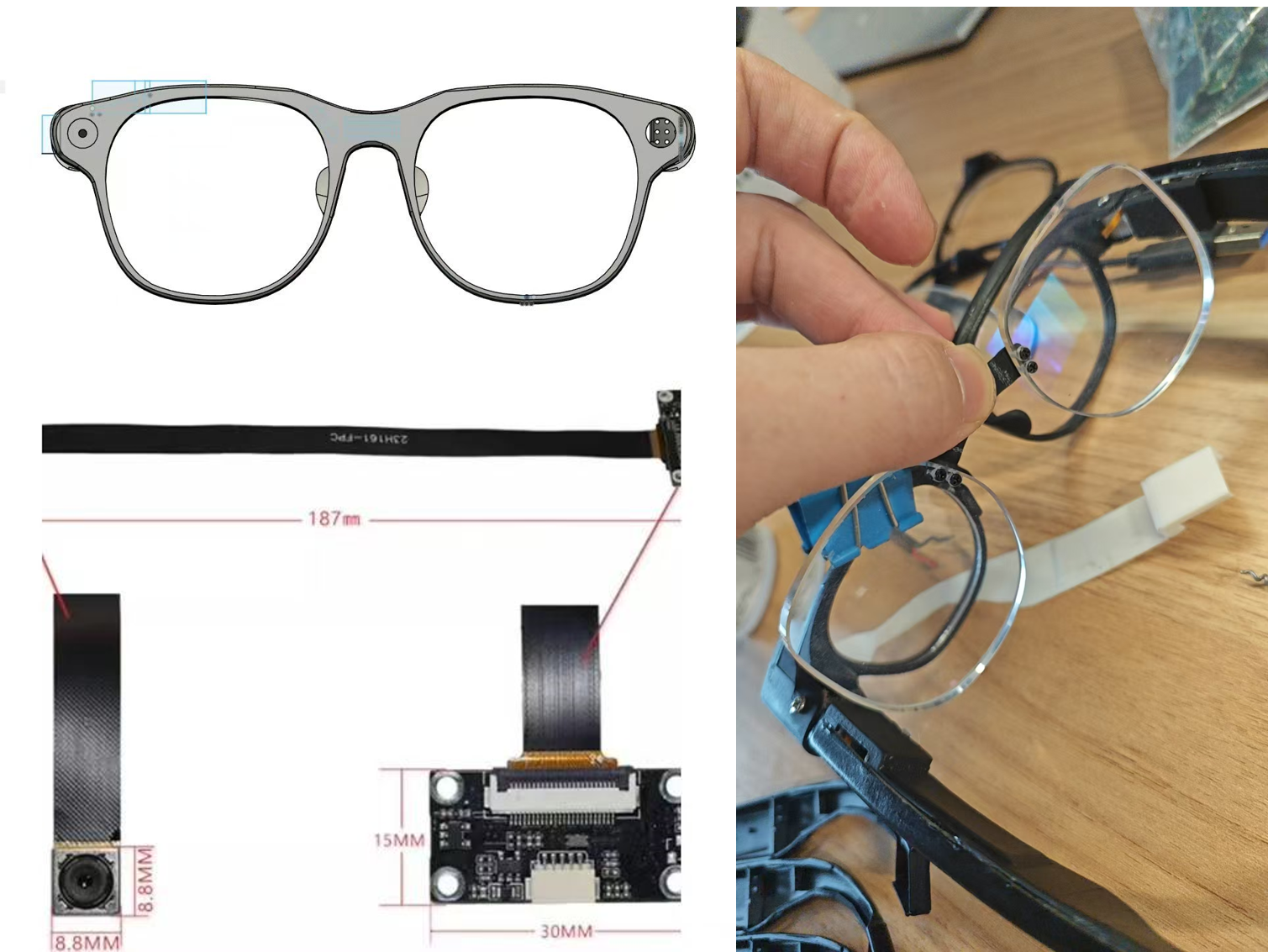

My Approach: Open Mobile Eye

The core idea is to use cost-effective miniature infrared cameras, a lightweight 3D-printed frame, and open algorithms for pupil detection and gaze estimation. The system is also designed with interfaces for future integration with vision-language models for semantic mapping.

For the algorithmic foundation, I draw on open eye-tracking research frameworks (e.g., work by Jason Orlosky and collaborators) and adapt the pipeline to mobile scenarios, with explicit attention to calibration and synchronization.
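To make the pupil detection step concrete, the following sketch estimates a pupil center as the centroid of dark pixels in an infrared eye image. It is a deliberately simplified stand-in, not the algorithm used in this project or in the referenced frameworks: the function name `dark_pupil_center`, the fixed `threshold`, and the list-of-rows image format are assumptions, and a practical pipeline would also need to handle glints, eyelashes, and ellipse fitting.

```python
def dark_pupil_center(image, threshold=50):
    """Estimate the pupil center as the centroid of dark pixels.

    image: grayscale image as a list of rows, each a list of ints in 0..255.
    threshold: pixels darker than this count as pupil (hypothetical value).
    Returns (x, y) in pixel coordinates, or None if no pixel is dark enough.
    """
    sum_x = sum_y = count = 0
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            if value < threshold:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None  # No pupil candidate found, e.g. during a blink.
    return (sum_x / count, sum_y / count)


# Synthetic 5x5 eye image: bright background with a dark 3x3 "pupil".
image = [[200] * 5 for _ in range(5)]
for y in range(1, 4):
    for x in range(2, 5):
        image[y][x] = 10
print(dark_pupil_center(image))
```

Under infrared illumination the pupil is typically the darkest region of the eye image, which is what makes a threshold-based starting point plausible before more robust fitting is applied.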

Human–Machine Attention Alignment

Beyond recording gaze points, the project aims to map attention into world coordinates and semantic space, so that human attention can be compared with model attention in a shared representation. Alignment is defined not merely as spatial overlap of saliency maps, but as structural consistency in how information is prioritized in space and time. This supports the definition of alignment metrics and enables controlled studies on how alignment relates to safety and interpretability.

Visual attention example video used for alignment and qualitative evaluation.
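As a point of comparison for the alignment definition in this section, the sketch below computes the simple overlap baseline that the structural definition is meant to go beyond. Human and model attention are both expressed as weights over a shared set of keys (semantic labels or grid cells), normalized to distributions, and compared by histogram intersection. The names `normalize` and `overlap_score` and the dictionary format are assumptions made for the example, not the project's alignment metric.

```python
def normalize(attention):
    """Scale non-negative attention weights so they sum to 1."""
    total = sum(attention.values())
    if total <= 0:
        raise ValueError("attention weights must have a positive sum")
    return {key: value / total for key, value in attention.items()}


def overlap_score(human, model):
    """Histogram intersection of two attention distributions, in [0, 1].

    human, model: dicts mapping a shared key (semantic label or grid cell)
    to a non-negative attention weight. 1 means identical distributions,
    0 means no shared attention mass.
    """
    p = normalize(human)
    q = normalize(model)
    return sum(min(p.get(k, 0.0), q.get(k, 0.0)) for k in p.keys() | q.keys())


human = {"road": 2.0, "sign": 2.0}
model = {"road": 1.0, "sky": 3.0}
print(overlap_score(human, model))
```

A score like this ignores ordering and timing: two observers who look at the same objects in a different sequence would score as perfectly aligned, which is one reason a structural, time-aware definition is of interest.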

Performance Targets and Current Status

The objective is to achieve accuracy and temporal stability sufficient for spatial-structural analysis, rather than absolute commercial-grade precision. The current prototype is designed for stable time synchronization, lightweight wear, and long-duration data collection, with all data and processing steps intended to remain open.

In ongoing tests, the prototype already supports typical scenarios such as urban walking, indoor navigation, target-search tasks, and initial human–machine attention alignment experiments.

Next Steps

Near-term work focuses on improving calibration procedures, optimizing infrared illumination and mechanical design, integrating semantic mapping with modern vision models, and preparing a public release of hardware files and code for reproducible experiments.